Detect spam bots and ban non-compliant users

**Is your feature request related to a problem? Please describe.**

Fight disinformation, spam and trolling. At the moment, if administrators do not set a per-user proposal limit, a malicious user can easily create an account and use a browser-automation tool such as Selenium to publish hundreds of contributions. Furthermore, administrators have no way to ban users.

**Describe the solution you'd like**

Implement a way to report users

Just as contributions can be flagged for moderation, a similar mechanism could let people flag users and give moderators the ability to block them. Everyone (admins, moderators, participants) can take part in this reporting, flagging users for harmful behaviour in the debate or for the content of their public profile (avatar, biography, personal website).

- Add a flag to report users on their public profile;

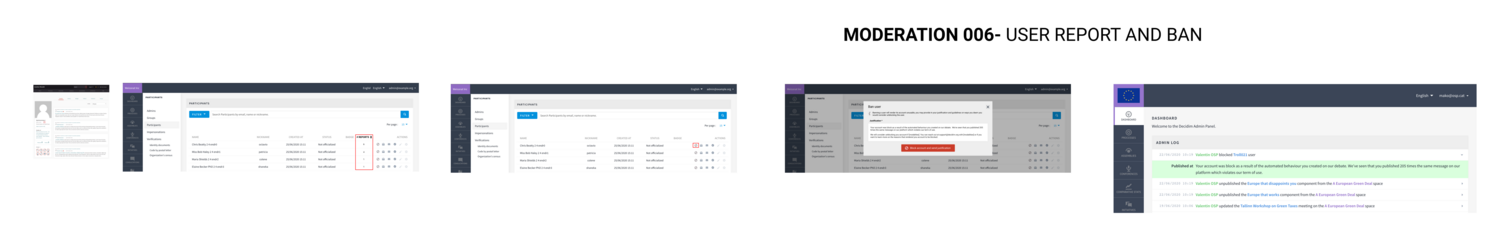

- In the admin panel, add a column to the participants table showing how many times each user has been reported, and make it sortable so admins can see the most-reported users first and take action (block) if needed;

- Send a notification to moderators and admins when a user is reported.
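A minimal sketch of the reporting side, assuming a hypothetical in-memory `ReportRegistry` rather than Decidim's actual models. It records at most one report per reporter for a given user (that deduplication rule is an assumption), notifies a configurable list of staff (moderators and admins), and returns participants ordered by report count, as the proposed sortable column would.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UserReport:
    """A single report filed against a user (hypothetical model)."""
    reporter_id: int
    reported_id: int
    reason: str  # e.g. "spam", "offensive_profile"


@dataclass
class ReportRegistry:
    """Hypothetical in-memory store; a real implementation would use the database."""
    staff_ids: tuple[int, ...] = ()  # moderators and admins to notify
    reports: list[UserReport] = field(default_factory=list)
    notifications: list[str] = field(default_factory=list)

    def report(self, reporter_id: int, reported_id: int, reason: str) -> bool:
        """Record a report and notify staff; return False if it is a duplicate."""
        # Assumption: the same reporter cannot report the same user twice.
        if any(r.reporter_id == reporter_id and r.reported_id == reported_id
               for r in self.reports):
            return False
        self.reports.append(UserReport(reporter_id, reported_id, reason))
        for staff_id in self.staff_ids:
            self.notifications.append(
                f"to {staff_id}: user {reported_id} was reported ({reason})")
        return True

    def participants_by_report_count(self) -> list[tuple[int, int]]:
        """(user_id, report_count) pairs, most-reported first (ties by user id)."""
        counts = Counter(r.reported_id for r in self.reports)
        return sorted(counts.items(), key=lambda item: (-item[1], item[0]))
```

For example, with two staff members, two distinct reports against user 42 and one against user 7 produce six notifications and the ordering `[(42, 2), (7, 1)]`.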

Allow administrators to ban non-compliant users

Administrators should be able to ban users, for example when someone repeatedly attacks the debate. This ban should be transparent.

Add a “ban” action button in the Participants panel.

- Admins can unban users.

- Users are banned at the Decidim identities level, meaning they cannot access the website with another provider through EU Login (e.g. if I sign in with Twitter and get banned, I cannot sign in with Facebook if that account has the same email or is associated with my EU Login ID).
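One way to enforce a ban across providers, sketched under the assumption that each login identity carries an email and, optionally, an EU Login ID. The `Identity` and `IdentityBanList` names are hypothetical, not Decidim's API. The ban is stored against those shared keys rather than against the provider account, so signing in through another provider with the same email or EU Login ID is refused, and unbanning removes the keys again.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Identity:
    """A login identity coming from an OAuth provider (hypothetical shape)."""
    provider: str                      # e.g. "twitter", "facebook"
    uid: str                           # provider-specific user id
    email: str
    eu_login_id: Optional[str] = None  # assumed to be available for EU Login users


def _normalize(email: str) -> str:
    """Compare emails case-insensitively and without surrounding spaces."""
    return email.strip().lower()


@dataclass
class IdentityBanList:
    """Hypothetical ban list keyed by email and EU Login ID, not by provider."""
    banned_emails: set[str] = field(default_factory=set)
    banned_eu_login_ids: set[str] = field(default_factory=set)

    def ban(self, identity: Identity) -> None:
        self.banned_emails.add(_normalize(identity.email))
        if identity.eu_login_id:
            self.banned_eu_login_ids.add(identity.eu_login_id)

    def unban(self, identity: Identity) -> None:
        self.banned_emails.discard(_normalize(identity.email))
        if identity.eu_login_id:
            self.banned_eu_login_ids.discard(identity.eu_login_id)

    def can_sign_in(self, identity: Identity) -> bool:
        """Refuse any provider whose email or EU Login ID matches a ban."""
        if _normalize(identity.email) in self.banned_emails:
            return False
        return (identity.eu_login_id is None
                or identity.eu_login_id not in self.banned_eu_login_ids)
```

In practice, banning a user would mean calling `ban` for every identity linked to the account, not only the one used at the time.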

When a user is banned:

- an attribute (e.g. `blocked`) is added to their profile, which prevents them from logging in;

- their avatar is replaced by the default one;

- their nickname is replaced by “Banned user”;

- their profile page becomes inaccessible to non-admin users (admins keep access to facilitate moderation based on the user's contribution history);

- all their contributions remain visible.
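The rules above can be sketched as checks on a hypothetical `blocked` flag. The `User`, `public_author`, `can_view_profile` and `DEFAULT_AVATAR` names are illustrative assumptions, not existing Decidim code.

```python
from dataclasses import dataclass

DEFAULT_AVATAR = "default-avatar.png"  # hypothetical asset name
BANNED_NICKNAME = "Banned user"


@dataclass
class User:
    id: int
    nickname: str
    avatar: str
    blocked: bool = False  # hypothetical ban attribute


@dataclass(frozen=True)
class PublicAuthor:
    """What other participants see next to a contribution."""
    nickname: str
    avatar: str


def public_author(user: User) -> PublicAuthor:
    # Contributions stay visible; only the displayed identity is masked.
    if user.blocked:
        return PublicAuthor(BANNED_NICKNAME, DEFAULT_AVATAR)
    return PublicAuthor(user.nickname, user.avatar)


def can_view_profile(viewer_is_admin: bool, profile_owner: User) -> bool:
    # Admins keep access so they can review the contribution history.
    return viewer_is_admin or not profile_owner.blocked


def can_log_in(user: User) -> bool:
    return not user.blocked
```

Masking the nickname and avatar at display time, instead of overwriting the stored values, keeps the ban reversible, which matters because admins can unban users.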

Automatically ban spamming users

To detect these users, we need to define the behaviours we want to prevent. For example, we could decide that an account publishing more than ten messages in less than one minute justifies the system blocking it automatically.
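A minimal sketch of the example rule, assuming each user's publications arrive in chronological order; `BurstDetector` is a hypothetical name. It keeps a per-user sliding window of timestamps and signals once more than ten fall within the last minute (strictly less than 60 seconds apart).

```python
from collections import deque
from datetime import datetime, timedelta

MAX_MESSAGES = 10               # example threshold from this issue
WINDOW = timedelta(minutes=1)


class BurstDetector:
    """Flags an account once it publishes more than max_messages within window."""

    def __init__(self, max_messages: int = MAX_MESSAGES,
                 window: timedelta = WINDOW) -> None:
        self.max_messages = max_messages
        self.window = window
        self._recent: dict[int, deque[datetime]] = {}

    def record(self, user_id: int, published_at: datetime) -> bool:
        """Record a publication; return True if the user is now over the limit."""
        times = self._recent.setdefault(user_id, deque())
        times.append(published_at)
        # Drop timestamps at least one window older than this publication,
        # so only posts from the last "less than one minute" remain.
        while published_at - times[0] >= self.window:
            times.popleft()
        return len(times) > self.max_messages
```

Eleven posts five seconds apart trigger the rule on the eleventh; eleven posts six seconds apart span exactly one minute and do not.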

- An asynchronous job could check the database every minute, looking for such behaviour, and report or block the offending users.

- The detailed list of these behaviours should be made public and the code open-sourced.
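The scheduled job and a publishable rule list could look roughly like this. The `Rule` format, the `run_spam_check` function and the second rule's thresholds are illustrative assumptions; only the ten-posts-per-minute example comes from this issue. Keeping the rules as plain data makes them easy to publish alongside the code.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class Action(Enum):
    REPORT = 1
    BLOCK = 2  # higher value means a stronger action


@dataclass(frozen=True)
class Rule:
    """A publicly documented anti-spam behaviour (hypothetical format)."""
    name: str
    max_posts: int
    window: timedelta
    action: Action


# Illustrative rule list: the first rule is the example from this issue,
# the second is an assumed, milder rule that only reports the user.
RULES = (
    Rule("burst_posting", 10, timedelta(minutes=1), Action.BLOCK),
    Rule("sustained_posting", 50, timedelta(hours=1), Action.REPORT),
)


def run_spam_check(posts: list[tuple[int, datetime]], now: datetime,
                   rules: tuple[Rule, ...] = RULES) -> dict[int, Action]:
    """One run of the scheduled job: return the strongest action per offending user."""
    decisions: dict[int, Action] = {}
    for rule in rules:
        since = now - rule.window
        counts = Counter(user_id for user_id, at in posts if since < at <= now)
        for user_id, count in counts.items():
            if count > rule.max_posts:
                current = decisions.get(user_id)
                if current is None or rule.action.value > current.value:
                    decisions[user_id] = rule.action
    return decisions
```

Because each run looks back over a fixed window, a burst that straddles two runs can slip through; checking each new publication against a per-user sliding window avoids that gap.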

**Describe alternatives you've considered**

The measures above are open to selection and discussion.

**Additional context**

We have seen these behaviours in our latest experiments, when we scaled to a few tens of thousands of users: automated user creation, automated content creation and coordinated mass posting.

**Could this issue impact users' private data?**

No

**Funded by**

EU Commission
